CAP Theorem Simplified

Consistency, availability, and partition tolerance are three major goals of distributed system design, and no distributed data store can guarantee all three at the same time.

In this blog, we’ll look at what each of these means, what the tradeoffs are, and how you can use them when designing your system.

What is the CAP Theorem?

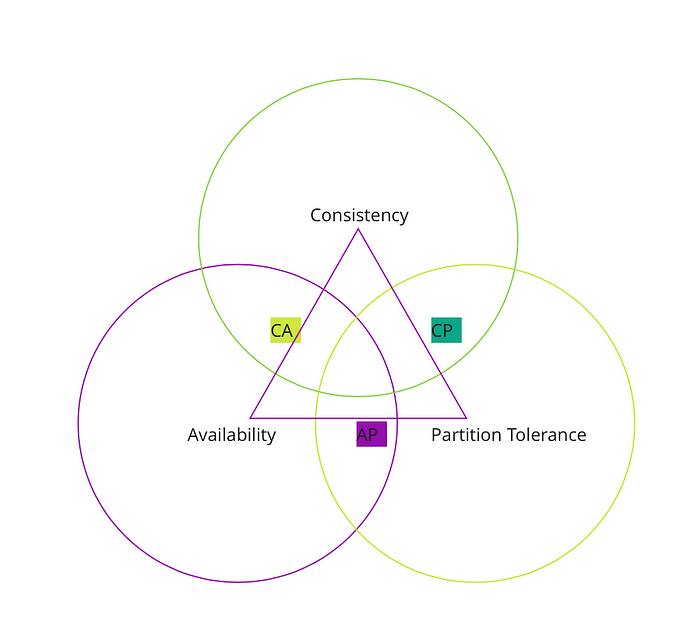

The CAP theorem, also known as Brewer’s theorem, is a concept in computer science that states that it is impossible for a distributed data store to simultaneously provide more than two of the following three guarantees:

- Consistency: Every read receives the most recent update or an error.

- Availability: Every request receives a response, without the guarantee that it contains the most recent write.

- Partition tolerance: The system continues to operate despite an arbitrary number of messages being dropped (or delayed) by the network between nodes.

Let’s try to understand each of these with examples.

Consistency

Consistency means that data is the same across all nodes: every node has the most recent data, so a read from any node returns the latest write. Consistency is important when you are dealing with money, where nodes can’t afford to disagree about the current balance, even briefly.

Think of what would happen if one ATM showed your balance as $1,000 and another showed $1,200. That would be chaos. In a consistent system, when you visit an ATM, either you are able to make transactions (the data is consistent and the server is up to date with the most recent write) or the ATM is temporarily down (the system refuses to serve you because it can’t confirm that it has the latest data).
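To make the ATM example concrete, here’s a minimal Python sketch of a consistent (CP-style) store. It assumes every deposit and balance check must reach all replicas, which is a simplification (real systems often use quorums or a leader instead). `Replica`, `ConsistentBalanceStore`, and `Unavailable` are hypothetical names used only for illustration.

```python
# Minimal sketch of a CP-style (consistent) balance store, assuming every
# operation must reach ALL replicas; if any replica is unreachable, the
# store returns an error instead of a possibly wrong balance.
# Class and method names are hypothetical.


class Unavailable(Exception):
    """Raised when the store refuses a request in order to stay consistent."""


class Replica:
    def __init__(self, name: str) -> None:
        self.name = name
        self.balance = 0
        self.reachable = True  # set to False to simulate a network problem


class ConsistentBalanceStore:
    def __init__(self, replicas: list[Replica]) -> None:
        self.replicas = replicas

    def _require_all_reachable(self) -> None:
        down = [r.name for r in self.replicas if not r.reachable]
        if down:
            # The "ATM is temporarily down" case: an error beats a wrong balance.
            raise Unavailable("cannot reach " + ", ".join(down))

    def deposit(self, amount: int) -> None:
        self._require_all_reachable()
        for r in self.replicas:
            r.balance += amount  # every replica applies the same update

    def read_balance(self) -> int:
        self._require_all_reachable()
        return self.replicas[0].balance  # all replicas agree, so any one can answer


atm_a, atm_b = Replica("atm-a"), Replica("atm-b")
store = ConsistentBalanceStore([atm_a, atm_b])
store.deposit(1000)
print(store.read_balance())  # 1000

atm_b.reachable = False  # the link to atm-b's replica is cut
try:
    store.deposit(200)
except Unavailable as err:
    print("ATM temporarily down:", err)  # ATM temporarily down: cannot reach atm-b
```

The store never shows two different balances; the price is that a single unreachable replica blocks every request.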

Consistency is important in a distributed system because it ensures that all nodes in the system have a consistent view of the data. This is especially important in systems where multiple users are updating the same data concurrently, as it ensures that each user sees the updates made by the other users.

However, consistency comes at a cost. If the system is designed to prioritize consistency, it sacrifices availability when the network is partitioned.

This means that the system may refuse requests if there is a network partition or if a node fails, and because nodes must coordinate on every update, it may respond more slowly under a high volume of requests.
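One common way to build a consistent store (not the only one) is a majority quorum: a read or write succeeds only if a strict majority of replicas take part. Any two majorities share at least one replica, so two sides of a partition can never both accept conflicting writes, but the minority side has to stop serving. This minimal sketch, with hypothetical function names, shows which side of a partition can keep going.

```python
# Minimal sketch, assuming a majority-quorum design. Function names are
# hypothetical and not taken from any particular database.


def has_quorum(reachable_replicas: int, total_replicas: int) -> bool:
    """A group of replicas may serve consistent reads/writes only if it
    contains a strict majority of all replicas."""
    return reachable_replicas > total_replicas // 2


def sides_that_can_serve(partition: list[int]) -> list[bool]:
    """Given the number of replicas on each side of a network partition,
    report which sides can keep serving without risking inconsistency."""
    total = sum(partition)
    return [has_quorum(size, total) for size in partition]


# Five replicas split 3 / 2: only the larger side keeps serving.
print(sides_that_can_serve([3, 2]))     # [True, False]
# Split 2 / 2 / 1: no side has a majority, so every request is refused.
print(sides_that_can_serve([2, 2, 1]))  # [False, False, False]
```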

Availability

Availability means that the system always responds to your requests, even if the response does not contain the most recent write.

In a distributed system, availability is important because it means the system keeps responding, both under a high volume of requests and when some nodes or parts of the system are experiencing issues.

However, like consistency, availability comes at a cost. If the system is designed to prioritize availability, it sacrifices consistency during a network partition. This means that the system may return outdated or conflicting data to clients until the nodes can exchange updates again.
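Here’s a minimal Python sketch of what "available but possibly outdated" looks like. It assumes each replica answers from its own local copy, writes are copied to peers only when the network allows it, and conflicts are resolved afterwards with last-writer-wins timestamps (one simple reconciliation strategy among several). `APReplica` and `APCluster` are hypothetical names.

```python
# Minimal sketch of an AP-style (available) store. Assumptions: replicas
# always answer from their local copy, replication is deferred while the
# network is partitioned, and last-writer-wins resolves conflicts.
# All names are hypothetical.
from typing import Optional


class APReplica:
    def __init__(self, name: str) -> None:
        self.name = name
        self.value: Optional[str] = None
        self.timestamp = 0  # timestamp of the newest write this replica has applied

    def read(self) -> Optional[str]:
        return self.value  # always answers, even if the value is out of date

    def apply(self, value: str, timestamp: int) -> None:
        # Last-writer-wins: keep the write with the newer timestamp.
        if timestamp > self.timestamp:
            self.value, self.timestamp = value, timestamp


class APCluster:
    def __init__(self, replicas: list[APReplica]) -> None:
        self.replicas = replicas
        self.partitioned = False
        self.clock = 0  # simplification: one shared counter stands in for timestamps
        self.pending: list[tuple[str, int]] = []  # writes not yet copied to peers

    def write(self, via: APReplica, value: str) -> None:
        self.clock += 1
        via.apply(value, self.clock)  # the replica the client reached accepts it
        if self.partitioned:
            self.pending.append((value, self.clock))  # replicate after the partition heals
        else:
            for r in self.replicas:
                r.apply(value, self.clock)

    def heal(self) -> None:
        """The partition ends: deliver every write that was held back."""
        self.partitioned = False
        for value, ts in self.pending:
            for r in self.replicas:
                r.apply(value, ts)
        self.pending.clear()


a, b = APReplica("a"), APReplica("b")
cluster = APCluster([a, b])
cluster.write(a, "v1")
cluster.partitioned = True
cluster.write(a, "v2")
print(b.read())  # v1 (stale, but the request still got an answer)
cluster.heal()
print(b.read())  # v2
```

Replica `b` never refuses a read; it simply lags behind until the partition heals.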

Imagine that you are building a database for a healthcare organization. The database stores patient medical records and needs to be available to healthcare providers at all times.

In this scenario, availability is critical because healthcare providers need to be able to access patient medical records at any time, day or night. If the database is not available, healthcare providers may not be able to access important information, which could impact patient care.

To ensure high availability, the database might be designed to automatically fail over to a backup server if the primary server goes down. This ensures that the database remains available to healthcare providers even if one of the servers experiences an issue.
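The failover idea can be sketched in a few lines of Python. This is a hypothetical illustration, not a real database client: `Server`, `ServerDown`, and `fetch_record` are made-up names, and the patient record is made-up data.

```python
# Minimal failover sketch. Assumption: the application reads from a primary
# server and falls back to a backup when the primary does not respond.
# All names and data here are hypothetical.


class ServerDown(Exception):
    pass


class Server:
    def __init__(self, name: str, records: dict[str, str]) -> None:
        self.name = name
        self.records = records
        self.up = True

    def get(self, record_id: str) -> str:
        if not self.up:
            raise ServerDown(self.name)
        return self.records[record_id]


def fetch_record(record_id: str, primary: Server, backup: Server) -> tuple[str, str]:
    """Try the primary first; if it is down, fail over to the backup.
    Returns (name of the server that answered, record)."""
    for server in (primary, backup):
        try:
            return server.name, server.get(record_id)
        except ServerDown:
            continue  # try the next server in line
    raise ServerDown("primary and backup are both down")


records = {"patient-42": "blood type: O+"}
primary = Server("primary", dict(records))
backup = Server("backup", dict(records))

print(fetch_record("patient-42", primary, backup))  # ('primary', 'blood type: O+')
primary.up = False
print(fetch_record("patient-42", primary, backup))  # ('backup', 'blood type: O+')
```

One thing to watch: if the backup is kept in sync asynchronously, it may not have the latest writes at the moment of failover, so this design trades some consistency for availability.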

Partition Tolerance

Partition tolerance refers to the ability of a distributed system to continue operating even if there are issues with the network that cause messages to be dropped or delayed between nodes.

This matters for any system spread across multiple machines, because real networks do drop and delay messages. A partition-tolerant system keeps processing updates and serving requests even while some nodes cannot reach each other.

However, like consistency and availability, partition tolerance comes at a cost. While a partition lasts, the system must give up either consistency or availability: it can keep answering and risk returning outdated or inconsistent data, or it can refuse some requests until the partition heals.

In distributed systems, it is impossible to guarantee all three. Since network partitions can’t be ruled out, the real tradeoff is between availability and consistency: during a partition, a system can stay consistent but lose availability, or stay available but lose consistency.
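Here’s that choice reduced to a single decision in a minimal Python sketch. It assumes a replica can tell whether it currently reaches its peers; `Mode`, `PartitionError`, and `handle_read` are hypothetical names, not settings of any real database.

```python
# Minimal sketch of the CP-vs-AP decision a replica faces during a partition.
# `Mode` is a hypothetical design choice, not a real configuration option.
from enum import Enum


class Mode(Enum):
    CP = "consistent"  # prefer correctness: refuse when unsure
    AP = "available"   # prefer answering: serve the local copy


class PartitionError(Exception):
    """Raised by a CP replica that cannot confirm it has the latest value."""


def handle_read(local_value: int, peers_reachable: bool, mode: Mode) -> int:
    if peers_reachable:
        # No partition: assume replication has kept the local copy current,
        # so the replica can be consistent AND available.
        return local_value
    if mode is Mode.CP:
        raise PartitionError("cannot reach peers to confirm the latest value")
    return local_value  # AP: answer anyway, even though it may be stale


print(handle_read(1000, peers_reachable=True, mode=Mode.CP))   # 1000
print(handle_read(1000, peers_reachable=False, mode=Mode.AP))  # 1000, possibly stale
try:
    handle_read(1000, peers_reachable=False, mode=Mode.CP)
except PartitionError as err:
    print("error:", err)  # error: cannot reach peers to confirm the latest value
```

Notice that the mode only matters when `peers_reachable` is false; without a partition, both modes behave the same.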

When designing your next application, you need to keep in mind the benefits and tradeoffs that come with distributed design. Things work well when you have one server and one database, but things get interesting when you scale, and that is when you learn what stable and durable system design really means.

Let’s take a look at a few of the databases that fall under this:

In conclusion, the CAP theorem is a fundamental principle of distributed systems that states that it is impossible for a distributed system to simultaneously provide all three of the following guarantees: consistency, availability, and partition tolerance. Instead, a distributed system must make tradeoffs between these guarantees (in practice, between consistency and availability when a partition occurs), depending on the specific requirements of the application and the needs of the users.

So when you are going to design your next system, remember these tradeoffs.

If you like this blog and would like to read more like it, check out my other blogs:

- Deep dive into System design

- Most commonly used algorithms

- Consistent Hashing

- Bit, Bytes And Memory Management
